Last week I talked to a friend about putting some real-time data on my website, primarily for novelty but also because I thought it was a neat idea for a very low-level solution.

The project came down to capturing some real-time data, getting it over to a server in a secure fashion, and figuring out how to present it with some consideration for latency and bandwidth. Here's an account of how I built this live feed.

Design

My primary objective was for the live video to blend into the existing aesthetic of the website and get out of the way as soon as you continue browsing.

All it's meant to be is a quirky detail.

Choices like making it a background feed just on the homepage and fading it out as you scroll down to the content made it less obnoxious.

I also wanted to be privacy-conscious when building something like this, which is why the feed is from my workspace, low-resolution, grayscale, streamed at a reasonably low frame rate, and easy to pause without breaking the experience on the website.

Hardware

To learn more about the hardware development tools I used for this project, look here.

The camera is low-quality, but that's ideal for this purpose. A lower-quality, somewhat fuzzy image is less distracting and hides a lot of detail. I wrote a small program for the chip that utilized the camera frame buffer to capture an image at a set interval, crop it, and send it to a microservice to serve to the website.

#include <Arduino.h>

#include <WiFi.h>

#include <WiFiClientSecure.h>

#include "soc/soc.h"

#include "soc/rtc_cntl_reg.h"

#include "esp_camera.h"

// ESP-32 onboard LED

#define ONBOARD_LED 2

// WiFi

const char *ssid = "Wifi-name";

const char *password = "the-password";

// Server and endpoint you're posting images to

String serverName = "example.udara.io";

String serverPath = "/upload";

const int serverPort = 443;

// Time between each HTTP POST image

const int timerInterval = 2000;

unsigned long previousMillis = 0;

// Camera pin definitions

// You can find the pin definitions for different models

// of cameras online (just google it).

// In this case I'm using the CAMERA_MODEL_WROVER_KIT

#define PWDN_GPIO_NUM -1

#define RESET_GPIO_NUM -1

#define XCLK_GPIO_NUM 21

#define SIOD_GPIO_NUM 26

#define SIOC_GPIO_NUM 27

#define Y9_GPIO_NUM 35

#define Y8_GPIO_NUM 34

#define Y7_GPIO_NUM 39

#define Y6_GPIO_NUM 36

#define Y5_GPIO_NUM 19

#define Y4_GPIO_NUM 18

#define Y3_GPIO_NUM 5

#define Y2_GPIO_NUM 4

#define VSYNC_GPIO_NUM 25

#define HREF_GPIO_NUM 23

#define PCLK_GPIO_NUM 22

// This is the root certificate for the server. In our case, it's one of the Let's Encrypt public certificates.

// You can get it by running an openssl query against the domain you're connecting to and viewing the certificate chain.

// (e.g. openssl s_client -showcerts -connect example.com:443)

const static char *test_root_ca PROGMEM =

"-----BEGIN CERTIFICATE-----\n"

"ADD YOUR ACTUAL SSL CERT HERE\n"

"-----END CERTIFICATE-----\n";

WiFiClientSecure client;

// Takes images from the camera, connects to the server using the

// wifi client and uploads the images by making a post request to the server.

String uploadImage()

{

String getAll;

String getBody;

// Make the image black and white

sensor_t *s = esp_camera_sensor_get();

s->set_special_effect(s, 2);

// Get the frame buffer for the camera

camera_fb_t *framebuf = NULL;

framebuf = esp_camera_fb_get();

if (!framebuf)

{

Serial.println("Camera capture failed");

delay(2500);

ESP.restart();

}

Serial.println("Connecting to: " + serverName);

if (client.connect(serverName.c_str(), serverPort))

{

Serial.println("Connected to server");

String head = "--LMR\r\nContent-Disposition: form-data; name=\"img\"; filename=\"latest.jpg\"\r\nContent-Type: image/jpeg\r\n\r\n";

String tail = "\r\n--LMR--\r\n";

uint32_t imageLen = framebuf->len;

uint32_t extraLen = head.length() + tail.length();

uint32_t totalLen = imageLen + extraLen;

client.println("POST " + serverPath + " HTTP/1.1");

client.println("Host: " + serverName);

// Include an additional authorization header if required by your microservice

client.println("Authorization: Bearer AUTH TOKEN HERE");

client.println("Content-Length: " + String(totalLen));

client.println("Content-Type: multipart/form-data; boundary=LMR");

client.println();

client.print(head);

// Send the image data to the server

// We do this by writing the data from the frame buffer to the client

uint8_t *fbBuf = framebuf->buf;

size_t fbLen = framebuf->len;

// Write the frame in chunks of up to 1024 bytes; the last chunk may be shorter.

// (The earlier version skipped the final chunk when the frame size was an exact multiple of 1024.)

for (size_t n = 0; n < fbLen; n += 1024)

{

size_t chunk = (fbLen - n < 1024) ? (fbLen - n) : 1024;

client.write(fbBuf + n, chunk);

}

client.print(tail);

// Return the frame buffer

esp_camera_fb_return(framebuf);

const unsigned long timeoutTimer = 10000;

unsigned long startTimer = millis();

boolean state = false;

// Wait for the response from the server

// (the subtraction stays correct even when millis() wraps around)

while (millis() - startTimer < timeoutTimer)

{

Serial.print(".");

delay(100);

while (client.available())

{

char c = client.read();

if (c == '\n')

{

if (getAll.length() == 0)

{

state = true;

}

getAll = "";

}

else if (c != '\r')

{

getAll += String(c);

}

if (state == true)

{

getBody += String(c);

}

startTimer = millis();

}

if (getBody.length() > 0)

{

break;

}

}

Serial.println();

client.stop();

Serial.println(getBody);

}

else

{

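// Return the frame buffer here too so it isn't leaked when the connection fails

esp_camera_fb_return(framebuf);

getBody = "Connection to " + serverName + " timed out.";

Serial.println(getBody);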

}

return getBody;

}

// Initial setup

void setup()

{

pinMode(ONBOARD_LED, OUTPUT);

WRITE_PERI_REG(RTC_CNTL_BROWN_OUT_REG, 0);

Serial.begin(115200);

client.setCACert(test_root_ca);

WiFi.mode(WIFI_STA);

Serial.println();

Serial.print("Connecting to ");

Serial.println(ssid);

WiFi.begin(ssid, password);

while (WiFi.status() != WL_CONNECTED)

{

Serial.print(".");

delay(500);

}

Serial.println();

Serial.print("Camera IP Address: ");

Serial.println(WiFi.localIP());

// Setup the camera using the pin definitions we set

camera_config_t config;

config.ledc_channel = LEDC_CHANNEL_0;

config.ledc_timer = LEDC_TIMER_0;

config.pin_d0 = Y2_GPIO_NUM;

config.pin_d1 = Y3_GPIO_NUM;

config.pin_d2 = Y4_GPIO_NUM;

config.pin_d3 = Y5_GPIO_NUM;

config.pin_d4 = Y6_GPIO_NUM;

config.pin_d5 = Y7_GPIO_NUM;

config.pin_d6 = Y8_GPIO_NUM;

config.pin_d7 = Y9_GPIO_NUM;

config.pin_xclk = XCLK_GPIO_NUM;

config.pin_pclk = PCLK_GPIO_NUM;

config.pin_vsync = VSYNC_GPIO_NUM;

config.pin_href = HREF_GPIO_NUM;

config.pin_sscb_sda = SIOD_GPIO_NUM;

config.pin_sscb_scl = SIOC_GPIO_NUM;

config.pin_pwdn = PWDN_GPIO_NUM;

config.pin_reset = RESET_GPIO_NUM;

config.xclk_freq_hz = 20000000;

config.pixel_format = PIXFORMAT_JPEG;

// Init with high specs to pre-allocate larger buffers

if (psramFound())

{

config.frame_size = FRAMESIZE_SVGA;

config.jpeg_quality = 10; // 0-63 lower number means higher quality

config.fb_count = 2;

}

else

{

config.frame_size = FRAMESIZE_CIF;

config.jpeg_quality = 12; // 0-63 lower number means higher quality

config.fb_count = 1;

}

// Initialize the camera

esp_err_t err = esp_camera_init(&config);

if (err != ESP_OK)

{

Serial.printf("Camera initialization failed with error 0x%x", err);

delay(1000);

ESP.restart();

}

uploadImage();

}

void loop()

{

unsigned long currentMillis = millis();

if (currentMillis - previousMillis >= timerInterval)

{

digitalWrite(ONBOARD_LED, HIGH);

uploadImage();

digitalWrite(ONBOARD_LED, LOW);

previousMillis = currentMillis;

}

}

The above code is an Arduino program that captures an image from a camera and posts it to a server using HTTP POST request over WiFi.

Header files: The program includes the libraries it needs: Arduino.h, WiFi.h, and WiFiClientSecure.h, plus esp_camera.h for the camera module and two soc headers that are used in setup() to disable the brownout detector.

Constants: The program defines several constants, including pin definitions for the ESP32 camera module, the server name, server path, and server port.

WiFi and client setup: The WiFiClientSecure client is instantiated, and the root certificate for the server is loaded into the client. The WiFi module is set to WIFI_STA mode, and the device attempts to connect to the specified WiFi network.

Camera setup: The ESP32 camera module is initialized using the pin definitions set earlier.

Image capture and upload loop: The program enters an infinite loop where it captures an image from the camera module, converts it to black and white, and posts it to the server using an HTTP POST request. The process is repeated at a set interval defined by timerInterval.

Supporting functions: The uploadImage() function takes care of posting the image to the server using the client object. The function returns the response from the server after posting the image. The response is printed to the serial monitor. Another function, setup(), initializes the program and performs some basic checks before starting the image capture and upload loop. Finally, the loop() function runs continuously, checking the time elapsed since the last image capture and upload cycle.

Something to keep in mind with a project like this is that you never want to open up a direct connection to a camera or any device on your local network. It's also a good idea to assume that all the content you push to the server could be recorded and made public outside of the context of your project.

Client/Server Software

For the server, I wrote a small microservice in Go, which I picked for its convenience and low performance overhead. It's a simple HTTP server that receives images from the camera and stores a copy of the latest frame. It also serves the newest frame to my website to power the live feed.

When someone visits the site, the front-end will poll the API to fetch the latest frame and sequentially fade it into view, recreating the video-like feed. It's real-time to within about 10 seconds when active, and when I pause the camera, the website will loop through a pre-captured sequence of images.

v1

Here's my first attempt at a microservice written in Go that listens for requests on port 80. The program is pretty straightforward. There are many details that go into writing a good (and secure) server, but this is just a weekend project, so we're doing the bare minimum.

package main

import (

"bytes"

"fmt"

"io"

"log"

"math/rand"

"net/http"

"os"

"strings"

"time"

)

type Status struct {

CreatedAt string `json:"CreatedAt"`

Image string `json:"Image"`

}

// Used to know if the stream is offline

// Offline if not active for 10 seconds

var lastStatusUpdate time.Time = time.Now()

// Used to store the latest image bytes

var lastImage bytes.Buffer

// Receives an uploaded image and keeps it in memory as the latest frame

func updateStatus(w http.ResponseWriter, r *http.Request) {

log.Println("Update status begin")

reqToken := r.Header.Get("Authorization")

if reqToken == "" {

log.Println("No authorization header")

fmt.Fprintf(w, "Invalid")

return

}

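splitToken := strings.Split(reqToken, "Bearer ")

// A header without the "Bearer " prefix would otherwise panic on splitToken[1]

if len(splitToken) != 2 {

log.Println("Malformed authorization header")

fmt.Fprintf(w, "Invalid")

return

}

reqToken = splitToken[1]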

const tkn string = "SOME_TOKEN"

if tkn != reqToken {

log.Println("Invalid authorization token")

fmt.Fprintf(w, "Invalid")

return

}

log.Println("Upload: Init")

// Limit to 10mb

r.ParseMultipartForm(10 << 20)

// Takes out the file in img field

file, handler, err := r.FormFile("img")

if err != nil {

log.Println("Upload: ", err)

fmt.Fprintf(w, "Failed")

return

}

defer file.Close()

buf := bytes.NewBuffer(nil)

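if _, err := io.Copy(buf, file); err != nil {

log.Println("Upload: ", err)

fmt.Fprintf(w, "Failed")

return

}

// Log the size rather than dumping the raw image bytes into the log file

log.Println("Buffer size: ", buf.Len())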

lastImage = *buf

lastStatusUpdate = time.Now()

fmt.Fprintf(w, "Success")

log.Println("Upload: Success", handler)

}

func getLatestImage(w http.ResponseWriter, r *http.Request) {

w.Header().Set("Content-Type", "image/jpeg")

w.Header().Set("Cache-Control", "no-cache, no-store, must-revalidate")

w.Header().Set("pragma", "no-cache")

w.Header().Set("X-Content-Type-Options", "nosniff")

w.Write(lastImage.Bytes())

}

func getRandomImage(w http.ResponseWriter, r *http.Request) {

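files, err := os.ReadDir("./loop/")

// Respond with an error instead of calling log.Fatal, which would stop the whole server.

// An empty directory is also rejected because rand.Intn(0) panics.

if err != nil || len(files) == 0 {

log.Println("Loop: ", err)

http.Error(w, "Failed", http.StatusInternalServerError)

return

}

randomIndex := rand.Intn(len(files))

randomImage := files[randomIndex]

buf, err := os.ReadFile("./loop/" + randomImage.Name())

if err != nil {

log.Println("Loop: ", err)

http.Error(w, "Failed", http.StatusInternalServerError)

return

}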

w.Header().Set("Content-Type", "image/jpeg")

w.Header().Set("Cache-Control", "no-cache, no-store, must-revalidate")

w.Header().Set("pragma", "no-cache")

w.Header().Set("X-Content-Type-Options", "nosniff")

w.Write(buf)

}

func setLogFile() error {

path := "./monthly.log"

logFile, err := os.OpenFile(path, os.O_WRONLY|os.O_APPEND|os.O_CREATE, 0644)

if err != nil {

// Let the caller decide how to handle the failure

return err

}

log.SetOutput(logFile)

log.SetFlags(log.LstdFlags | log.Lshortfile | log.Lmicroseconds)

return nil

}

func latest(w http.ResponseWriter, r *http.Request) {

now := time.Now()

var offlineIfLastSeenBefore time.Time = now.Add(-10 * time.Second)

// If offline

if lastStatusUpdate.Before(offlineIfLastSeenBefore) {

getRandomImage(w, r)

} else {

getLatestImage(w, r)

}

}

func handleRequests() {

http.HandleFunc("/upload", updateStatus)

http.HandleFunc("/latest", latest)

// http.HandleFunc("/status", getStatus)

log.Fatal(http.ListenAndServe(":80", nil))

}

func main() {

err := setLogFile()

if err != nil {

log.Fatal(err)

}

log.Println("Log file set")

handleRequests()

}

My original approach to recreating a live feed using a sequence of images instead of creating an actual video live stream has a couple of pros and cons. Serving images from a server is less complicated than setting up a video stream. Additionally, I could host at least a couple thousand live viewers off a small server (2 vcpus, 2 gigs of memory).

Serving images that are ~20-30 KB each at 30 frames per minute works out to roughly 1 MB per minute of bandwidth per viewer. For reference, a video stream of roughly the same quality but at a much higher frame rate only costs about 2 MB per minute. Definitely room for improvement. For a future upgrade, I'm hoping to take a page out of video compression algorithms and see how far I can compress these individual frames or if there is a way for me to send diffs instead of full images.
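The arithmetic is easy to check. Here's a small sketch of it in Go; kbPerMinute is just a helper name for this example, and the 100-viewer line is only there to show how the per-viewer number scales.

```go
package main

import "fmt"

// kbPerMinute returns the bandwidth one viewer uses, in kilobytes per minute.
func kbPerMinute(frameKB, framesPerMinute float64) float64 {
	return frameKB * framesPerMinute
}

func main() {
	low := kbPerMinute(20, 30)  // smallest frames
	high := kbPerMinute(30, 30) // largest frames

	fmt.Printf("per viewer: %.0f-%.0f KB/min\n", low, high)
	// prints "per viewer: 600-900 KB/min"

	viewers := 100.0
	fmt.Printf("%.0f viewers: %.0f-%.0f MB/min\n", viewers, low*viewers/1000, high*viewers/1000)
	// prints "100 viewers: 60-90 MB/min"
}
```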

v2

My second approach for handling the video stream is to serve an HLS feed. You can pipe a stream of images through ffmpeg to produce the video stream and then serve it. I'll report back on how it goes.
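I haven't built this yet, so treat the following as a rough sketch of what the ffmpeg invocation could look like from Go rather than a working pipeline. The helper name hlsArgs, the playlist name, the 4-second segments, and the 5-entry playlist are all assumptions picked for illustration. It only builds the command and doesn't run it.

```go
package main

import (
	"fmt"
	"os/exec"
	"strconv"
)

// hlsArgs builds ffmpeg arguments that read JPEG frames from stdin
// and write an HLS playlist plus segments.
func hlsArgs(framesPerSecond float64, playlist string) []string {
	return []string{
		"-f", "image2pipe", // input is a stream of concatenated images
		"-framerate", strconv.FormatFloat(framesPerSecond, 'f', -1, 64),
		"-i", "pipe:0", // read the frames from stdin
		"-c:v", "libx264", // encode as H.264
		"-pix_fmt", "yuv420p", // widely supported pixel format
		"-f", "hls",
		"-hls_time", "4", // target segment length in seconds (assumed)
		"-hls_list_size", "5", // segments kept in the playlist (assumed)
		"-hls_flags", "delete_segments", // remove old segments from disk
		playlist,
	}
}

func main() {
	// 0.5 frames per second matches one upload every two seconds.
	cmd := exec.Command("ffmpeg", hlsArgs(0.5, "stream.m3u8")...)
	fmt.Println(cmd.Args)
	// To actually stream, you'd write each uploaded JPEG to cmd.StdinPipe()
	// after calling cmd.Start().
}
```

At a frame rate this low the segment and keyframe settings will likely need tuning, which is part of what I still have to figure out.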

A couple of notes on enhancing privacy

As this Reddit user points out, sharing a live feed of yourself on the internet is not to be taken lightly. You could inadvertently leak information about you or others in your environment, so it's a good idea to consider these implications seriously.

I picked my workspace as the subject because I didn't mind working in public. It was also the type of presence I was aiming for aesthetically, but I'll likely point it somewhere else in the future.

If you've accepted the exposure and risk involved with live streaming, here are a few things I built into the streaming service that I hope enhance privacy further.

- The camera hardware is not accessible over the internet.

- Hardware shutoff such that the camera can be disabled physically. When the camera is not active, the website falls back to looping a pre-recorded sequence.

- The video is only sometimes live. The service will loop pre-recorded sequences at random intervals to discourage anyone from tracking any activity over time. While this means that the video isn't always live, it's a fair trade-off for additional privacy and safety.

- Minimize the number of elements in your stream that may allow someone to geolocate you. This means no landmarks, easily identifiable buildings, photos or items in the frame.

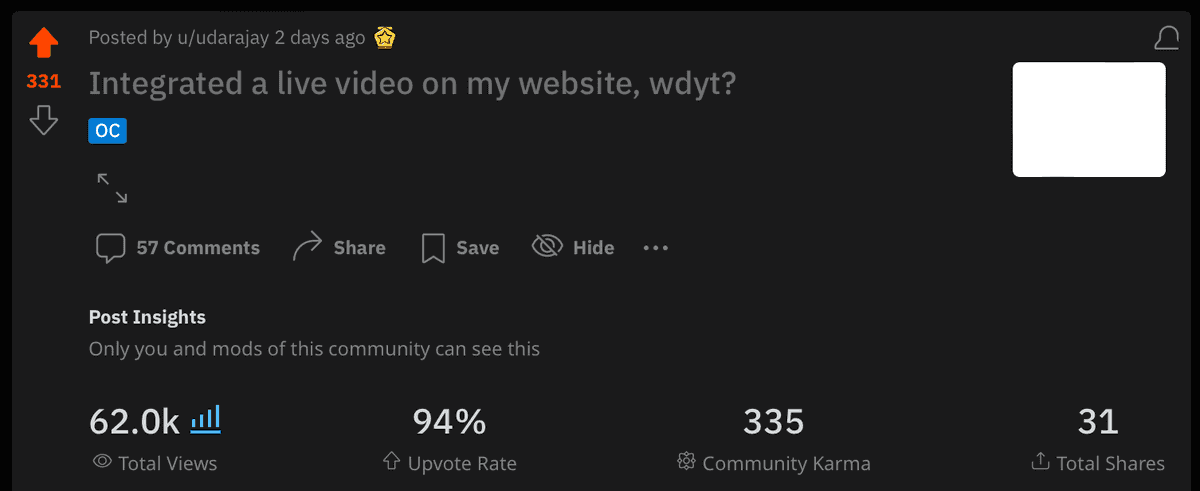

Some stats

It caught me by surprise how many folks were interested in this. You don't often have people sharing a casual stream outside of the context of places like Twitch or YouTube. In hindsight, I should've added a wave button so visitors could interact with the feed and say hi.

- ~15,000 visitors on the site over the weekend

- At the peak there were close to 100 concurrent viewers

- ~40GB in bandwidth for the whole ordeal

That's all there is to it. If you end up building something similar or have any thoughts about this, I'd love to hear about it.